How Not Diamond works

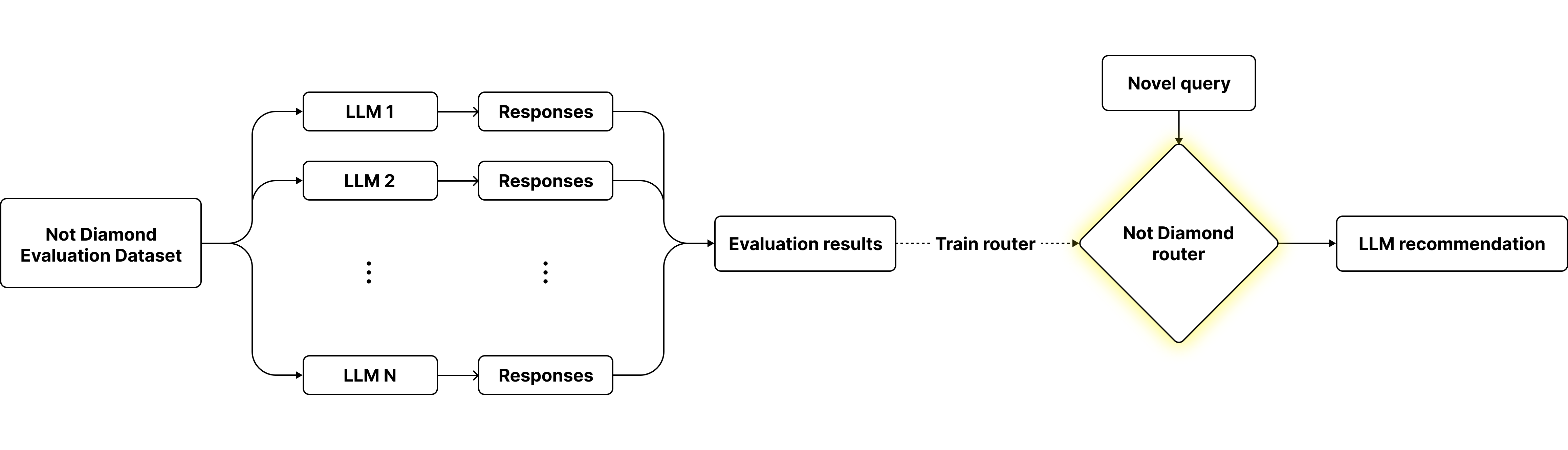

For most distributions of data, a single model rarely outperforms every other model on every query. Not Diamond combines multiple models into a "meta-model" that learns when to call each LLM. This lets it outperform each individual model while reducing cost and latency, because it routes queries to smaller, cheaper models whenever doing so doesn't degrade quality.
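
The idea of falling back to a cheaper model "when doing so doesn't degrade quality" can be expressed as a simple decision rule. This is a minimal sketch, not Not Diamond's implementation: it assumes a router has already produced a predicted quality score for each model on the current query, and the model names, scores, prices, and the `tolerance` parameter are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str                 # hypothetical model identifier
    predicted_quality: float  # assumed router estimate for this query, in [0, 1]
    cost_per_call: float      # hypothetical price per call in USD


def choose_model(candidates: list[Candidate], tolerance: float = 0.02) -> Candidate:
    """Return the cheapest candidate whose predicted quality is within
    `tolerance` of the best predicted quality for this query."""
    best_quality = max(c.predicted_quality for c in candidates)
    acceptable = [c for c in candidates if c.predicted_quality >= best_quality - tolerance]
    return min(acceptable, key=lambda c: c.cost_per_call)


# Hypothetical predictions for a single query.
candidates = [
    Candidate("large-model", predicted_quality=0.91, cost_per_call=0.030),
    Candidate("medium-model", predicted_quality=0.90, cost_per_call=0.004),
    Candidate("small-model", predicted_quality=0.72, cost_per_call=0.001),
]
print(choose_model(candidates).name)  # medium-model
```

Here the medium model wins: its predicted quality is within 0.02 of the best, and it costs far less per call. Setting `tolerance=0.0` always picks the top-scoring model, trading cost for quality.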

To determine which LLM to recommend for a given query, we have built a large evaluation dataset that measures each LLM's performance on tasks ranging from question answering to coding to reasoning. We use this dataset to train a ranking algorithm that scores the candidate LLMs for any incoming query and identifies the one best suited to respond.
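
Not Diamond's ranking algorithm is not detailed here, so the sketch below swaps in a deliberately simple technique, a k-nearest-neighbour ranker, purely to illustrate how per-model evaluation scores can drive a per-query ranking. The dataset, the bag-of-words similarity (a crude stand-in for a learned query embedding), and the value of `k` are all hypothetical.

```python
import math
from collections import Counter, defaultdict

# Hypothetical evaluation dataset: each query with per-model scores (1.0 = correct, 0.0 = wrong).
EVAL_DATA = [
    ("write a python function to reverse a list", {"model-a": 1.0, "model-b": 0.0}),
    ("fix the bug in this python loop", {"model-a": 1.0, "model-b": 0.0}),
    ("what is the capital of france", {"model-a": 1.0, "model-b": 1.0}),
    ("who wrote the novel war and peace", {"model-a": 0.0, "model-b": 1.0}),
]


def bag_of_words(text: str) -> Counter:
    """Token counts; a crude stand-in for a learned query embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_models(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Rank models by their similarity-weighted mean score on the k most similar evaluated queries."""
    q = bag_of_words(query)
    neighbours = sorted(
        ((cosine(q, bag_of_words(text)), scores) for text, scores in EVAL_DATA),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]
    totals = defaultdict(float)
    weights = defaultdict(float)
    for sim, scores in neighbours:
        for model, score in scores.items():
            totals[model] += sim * score
            weights[model] += sim
    ranking = {m: (totals[m] / weights[m] if weights[m] else 0.0) for m in totals}
    return sorted(ranking.items(), key=lambda kv: kv[1], reverse=True)


print(rank_models("write a python function to sort a list"))  # model-a ranked first
print(rank_models("who wrote the book war and peace"))        # model-b ranked first
```

A production ranker would use learned representations and far more data; the point here is only that a query's ranking is inferred from how each model performed on similar evaluated queries.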

Alternatively, our custom router training interface lets you supply your own evaluation data, shown on the left side of the diagram above. Not Diamond then trains a custom router optimized for your use case.
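
To make the shape of "evaluation data" concrete, here is a minimal sketch of one way per-prompt, per-model scores could be organized and reduced to training labels (the best model for each prompt) that a router learns to predict. The record format, model names, prices, and the cost-based tie-break are hypothetical illustrations; they are not Not Diamond's actual upload schema or training procedure.

```python
from collections import defaultdict

# Hypothetical flat evaluation records: (prompt, model, score in [0, 1]).
RECORDS = [
    ("Summarize this contract clause", "model-a", 0.8),
    ("Summarize this contract clause", "model-b", 0.9),
    ("Translate 'hello' to German", "model-a", 1.0),
    ("Translate 'hello' to German", "model-b", 1.0),
]

# Hypothetical per-call prices, used only to break score ties.
COSTS = {"model-a": 0.001, "model-b": 0.010}


def build_training_labels(records, costs):
    """Group scores by prompt, then label each prompt with its highest-scoring
    model, preferring the cheaper model when scores tie."""
    by_prompt = defaultdict(dict)
    for prompt, model, score in records:
        by_prompt[prompt][model] = score
    return {
        prompt: max(scores, key=lambda m: (scores[m], -costs[m]))
        for prompt, scores in by_prompt.items()
    }


labels = build_training_labels(RECORDS, COSTS)
# Contract prompt -> model-b (higher score); translation tie -> model-a (cheaper).
print(labels)
```

A classifier trained on labels like these, or directly on the raw scores, could then route new prompts that resemble your data.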